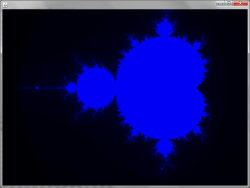

The Rootbeer toolkit promises to run (almost) any Java code on the GPU. That sounds good, so I took a look and tried a simple example: calculating a visualization of the well-known Mandelbrot set on the GPU using Rootbeer.

In short: after some initial setup effort, it works nicely.

But on my machine (a Lenovo T520 laptop with an NVIDIA NVS 4200M), it's slower than doing the same calculation on a single CPU core. I won't blame Rootbeer, as this is only a business laptop that doesn't even get a good rating for gaming performance.

There is hardware available that is orders of magnitude faster, on which you can expect better results. The other thing is that general Java code is translated to, well, general CUDA code, which doesn't take advantage of built-in functions.

As the Rootbeer test suite also doesn't run without errors on my machine, the speed is probably not very representative. Nevertheless, trying out Rootbeer was an interesting experience (especially given that I had no previous GPU computing experience).

Here's how you can try it, too.

Initial Setup

Here is how to get set up and running (the version numbers are the ones I used; others may work as well):

- Install CUDA Toolkit 4.2

- Install CUDA Drivers 4.2. If you don't install these, you'll get link errors while loading the CUDA DLLs (cudaruntime_x64.dll in my 64-bit case).

- Install Visual Studio Express Edition for C++ 2010

- If you have a 64-bit machine, there's an additional step. Express Edition only brings along 32-bit compilers, so you will also need to install the Windows SDK 7.1 (http://msdn.microsoft.com/en-us/windowsserver/bb980924.aspx) and tweak the build a bit by creating the file C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\bin\amd64\vcvars64.bat with the following content:

CALL "C:\Program Files\Microsoft SDKs\Windows\v7.1\Bin\SetEnv.cmd" /x64

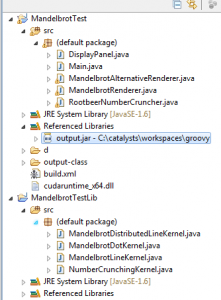

(see the issue in the issue tracker, or on Stack Overflow)

- Implement your kernel and an application that runs it with Rootbeer, build them, and follow the instructions of the Rootbeer toolkit (take a look in the doc folder). If things already work at that point, fine. Otherwise, the following hints may help.

- cicc.exe not found: this problem seems to be NVIDIA's fault; there's an error "command 'cicc' could not be found". The solution is to copy the file C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v4.2\nvvm\cicc.exe to C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v4.2\bin.

- Copy .cubin files to the proper location: when trying to run a Rootbeer'ed jar file, I got the error "not found: output-class/MandelbrotLineKernelGcObjectVisitor.cubin" (MandelbrotLineKernel is the name of my GPU-runnable kernel class). You can work around this by extracting this file from the generated jar file and putting it at the expected path in the execution directory.

- If you're seeing exceptions like "cannot find class: edu.syr.pcpratts.rootbeer.runtime.remap.java.lang.Math" when running Rootbeer on your jar, try running the Rootbeer test suite in the same location; it generates some additional folders that seem to be needed.
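The .cubin workaround from the hints above can be scripted instead of done by hand. The following is a minimal sketch, not part of Rootbeer: the class name CubinExtractor is hypothetical, and it assumes the .cubin file is stored somewhere inside the Rootbeer-generated jar under its plain file name (the exact entry path may differ between Rootbeer versions). It copies the first jar entry whose name ends with that file name to the path the runtime complained about.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.Enumeration;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

/** Hypothetical helper: copies a file packed somewhere inside a jar to a path on disk. */
final class CubinExtractor {

    /**
     * Finds the first non-directory jar entry whose name is, or ends with, the file name
     * of {@code target} and copies it to {@code target}, creating parent directories.
     * Assumption: the .cubin is stored in the jar under its plain file name.
     *
     * @return true if a matching entry was found and copied
     */
    static boolean extract(Path jar, Path target) throws IOException {
        String fileName = target.getFileName().toString();
        try (JarFile jarFile = new JarFile(jar.toFile())) {
            Enumeration<JarEntry> entries = jarFile.entries();
            while (entries.hasMoreElements()) {
                JarEntry entry = entries.nextElement();
                String name = entry.getName();
                if (entry.isDirectory()) {
                    continue;
                }
                if (name.equals(fileName) || name.endsWith("/" + fileName)) {
                    Path parent = target.toAbsolutePath().getParent();
                    if (parent != null) {
                        Files.createDirectories(parent); // e.g. output-class/
                    }
                    try (InputStream in = jarFile.getInputStream(entry)) {
                        Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
                    }
                    return true;
                }
            }
        }
        return false; // no entry with that file name in the jar
    }
}
```

Run from the execution directory, a call such as `CubinExtractor.extract(Paths.get("MandelbrotGpu.jar"), Paths.get("output-class/MandelbrotLineKernelGcObjectVisitor.cubin"))` would put the file where the error message expects it; the jar name there is made up.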

That's it: the GPU should be busy now (in my case, rendering the Mandelbrot set; see the code below).

Source Code

Here's the core algorithm that performs the calculation on the GPU. Nothing special: the constructor just shovels in the necessary data, and gpuMethod() performs the standard Mandelbrot calculation, inspired by Wikipedia.
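For reference, here is the escape-time iteration that gpuMethod() computes, written with the names used in the kernel (column, j, scale, offsetX, offsetY, maxIteration). Each pixel of the scan line is mapped to a point $c$ in the complex plane:

$$
x_0 = \frac{\text{column}}{\text{scale}} + \text{offsetX}, \qquad
y_0 = \frac{j}{\text{scale}} + \text{offsetY}, \qquad
c = x_0 + i\,y_0
$$

$$
z_0 = 0, \qquad z_{k+1} = z_k^2 + c
$$

With $z_k = x_k + i\,y_k$, squaring and adding $c$ gives the two real-valued updates in the loop:

$$
x_{k+1} = x_k^2 - y_k^2 + x_0, \qquad y_{k+1} = 2\,x_k\,y_k + y_0
$$

The value stored in data[j] is $\min(K, \text{maxIteration})$, where $K$ is the first $k$ with $x_k^2 + y_k^2 \ge 4$. Since $|z_k| > 2$ guarantees divergence, comparing the squared magnitude with 4 is a cheap bailout that avoids a square root, and splitting the complex square into real and imaginary parts means no complex-number class is needed.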

[codesyntax lang="java"]

// Rootbeer's Kernel interface (package name of the Rootbeer release used in this post)
import edu.syr.pcpratts.rootbeer.runtime.Kernel;

// Calculates one scan line of the Mandelbrot image
public class MandelbrotLineKernel implements Kernel {

    // these will be available for the calculation
    int width;        // image width
    int height;       // image height
    int[] data;       // result - one vertical scan line
    int column;       // index of the column for the scan line
    float scale;      // zoom factor into the image
    float offsetX;    // x offset for panning around
    float offsetY;    // y offset for panning around
    int maxIteration; // max iterations trying to escape the set

    public MandelbrotLineKernel(int width, int height, int[] result, int column,
            double scale, double offsetX, double offsetY, int maxIteration) {
        super();
        this.width = width;
        this.height = height;
        this.data = result;
        this.column = column;
        this.scale = (float) scale;
        this.offsetX = (float) offsetX;
        this.offsetY = (float) offsetY;
        this.maxIteration = maxIteration;
    }

    // will be translated to CUDA and run on the GPU
    public void gpuMethod() {
        // standard Mandelbrot set algorithm
        double x0 = column / scale + offsetX;
        for (int j = 0; j < height; j++) {
            double y0 = j / scale + offsetY;
            int k = 0;
            double x = 0, y = 0;
            while (x * x + y * y < 4 && k < maxIteration) {
                double xtemp = x * x - y * y + x0;
                y = 2 * x * y + y0;
                x = xtemp;
                k++;
            }
            data[j] = k;
        }
    }
}

[/codesyntax]
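Since the GPU timing is compared against a single CPU core, a plain-Java version of the same computation is handy, both for timing and for checking the GPU output pixel by pixel. The sketch below is not from the original project: MandelbrotCpuReference, computeColumn and computeImage are hypothetical names. It repeats the loop from gpuMethod() on the CPU (with the same float inputs widened to double) and assembles the columns in the same x + width * y layout that MandelbrotRenderer uses.

```java
/**
 * Hypothetical CPU-only counterpart of MandelbrotLineKernel.gpuMethod(),
 * useful as a single-core baseline and for verifying GPU results.
 */
final class MandelbrotCpuReference {

    /** Iteration counts for one vertical scan line, using the same loop as the kernel. */
    static int[] computeColumn(int height, int column, float scale,
                               float offsetX, float offsetY, int maxIteration) {
        int[] data = new int[height];
        double x0 = column / scale + offsetX; // same float arithmetic as the kernel
        for (int j = 0; j < height; j++) {
            double y0 = j / scale + offsetY;
            int k = 0;
            double x = 0, y = 0;
            while (x * x + y * y < 4 && k < maxIteration) {
                double xtemp = x * x - y * y + x0;
                y = 2 * x * y + y0;
                x = xtemp;
                k++;
            }
            data[j] = k;
        }
        return data;
    }

    /** Whole image, stored row by row: the pixel (x, y) lives at index x + width * y. */
    static int[] computeImage(int width, int height, float scale,
                              float offsetX, float offsetY, int maxIteration) {
        int[] image = new int[width * height];
        for (int x = 0; x < width; x++) {
            int[] column = computeColumn(height, x, scale, offsetX, offsetY, maxIteration);
            for (int y = 0; y < height; y++) {
                image[x + width * y] = column[y];
            }
        }
        return image;
    }
}
```

Timing a call like `computeImage(800, 600, 250f, -2.2f, -1f, 125)` with System.nanoTime() gives a single-core baseline for the renderer's default parameters (scale 250, offsets -2.2 and -1, maxIteration = scale / 2); the 800 x 600 size is just an example.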

And here's the supporting code that splits the calculation into scan-line portions, submits the jobs to Rootbeer, and assembles the scan lines into the final result.

[codesyntax lang="java"]

import java.util.ArrayList;
import java.util.List;

// Rootbeer runtime classes (package names of the Rootbeer release used in this post)
import edu.syr.pcpratts.rootbeer.runtime.Kernel;
import edu.syr.pcpratts.rootbeer.runtime.Rootbeer;

public class MandelbrotRenderer {

    private double scale = 250;
    private double offsetX = -2.2;
    private double offsetY = -1;
    private int maxIteration;
    private Rootbeer rootbeer;

    private Rootbeer getRootbeer() {
        // lazily initialize (constructor won't work as this class is
        // constructed in startup thread, rendering is done in ui thread)
        if (rootbeer == null) {
            rootbeer = new Rootbeer();
        }
        return rootbeer;
    }

    public int[] calculateMandelbrotSet(final int width, final int height) {
        maxIteration = (int) (scale / 2);
        final List<Kernel> jobs = new ArrayList<Kernel>();
        final List<int[]> calculatedColumns = new ArrayList<int[]>();
        // create per vertical scan line kernels, and keep track of the future result data
        for (int columnIndex = 0; columnIndex < width; columnIndex++) {
            final int[] column = new int[height];
            jobs.add(createColumnForCalculation(width, height, column, columnIndex));
            calculatedColumns.add(column);
        }
        runOnRootbeer(jobs);
        return aggregateResultColumnsToEntireData(width, height, calculatedColumns);
    }

    private void runOnRootbeer(final List<Kernel> jobs) {
        long t = System.currentTimeMillis();
        getRootbeer().runAll(jobs);
        System.out.println("Rootbeer running of " + jobs.size() + " jobs took "
                + (System.currentTimeMillis() - t) + " ms");
    }

    private MandelbrotLineKernel createColumnForCalculation(int width, int height, int[] data, int column) {
        return new MandelbrotLineKernel(width, height, data, column, scale, offsetX, offsetY, maxIteration);
    }

    // copies each vertical scan line into the row-major image: pixel (i, j) -> data[i + width * j]
    private int[] aggregateResultColumnsToEntireData(final int width,
            final int height, List<int[]> calculatedColumns) {
        final int[] data = new int[width * height];
        int i = 0;
        for (int[] column : calculatedColumns) {
            for (int j = 0; j < column.length; j++) {
                data[i + width * j] = column[j];
            }
            i++;
        }
        return data;
    }
}

[/codesyntax]

That's it. Add the boilerplate code below for drawing in a Swing panel, and there's the Mandelbrot set.

[codesyntax lang="java"]

import java.awt.Graphics;
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.image.BufferedImage;
import javax.swing.JPanel;

public class DisplayPanel extends JPanel {

    private MandelbrotRenderer renderer = new MandelbrotRenderer();

    @Override
    public void paint(Graphics g) {
        final Graphics2D g2 = (Graphics2D) g;
        final Rectangle clipBounds = g2.getClipBounds();
        int width = clipBounds.width;
        int height = clipBounds.height;
        long time = System.currentTimeMillis();
        final int[] data = renderer.calculateMandelbrotSet(width, height);
        System.out.println("Overall rendering time " + (System.currentTimeMillis() - time) + " ms");
        drawImage(g2, clipBounds, width, height, data);
    }

    private void drawImage(final Graphics2D g2, final Rectangle clipBounds,
            int width, int height, final int[] data) {
        final BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int i = 0; i < data.length; i++) {
            int value = data[i];
            // iteration count times 4 is used directly as the RGB value
            image.setRGB(i % width, i / width, (value * 4));
        }
        g2.drawImage(image, clipBounds.x, clipBounds.y, null);
    }
}

[/codesyntax]

Sounds too easy to be true? No GPU knowledge, no CUDA programming, just plain old Java?

Try it yourself 😉

Results and Lessons Learned

Along the way, while playing around and trying to tweak performance, I came across the following findings.

Working with Rootbeer

GPU computing

Performance

As said above, that's just a business laptop, not even good for decent game graphics. It's probably no exaggeration to expect a hundredfold improvement on better hardware.

Nice article!

I have created a brute-force chess algorithm and was thinking about GPGPU computing. This seems to be the easiest way, but my application probably isn't suitable for it. The algorithm mainly consists of simple calculations and lots of ArrayList lookups. Also, making it multi-threaded (more than 1000 threads) would be quite difficult.

Yep, parallelizing a chess algorithm sounds like a good challenge 😉

But there are also lots of papers on it on Google.

These instructions are no longer valid. None of Rootbeer's examples work except `ArrayMultApp`, and methods like `runAll()` are no longer available either.